Deploying a ZooKeeper environment with Ansible

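ZooKeeper depends on Java, so before installing ZooKeeper, confirm that Java is present: run `java` on the command line and check that the command is found. If no Java (JDK) environment is installed, see the Java (JDK) environment guide. If you use Docker, you can simply pull an image that already has ssh and Java. Here I use the waterandair/jdk image that I published myself, as follows: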

Create containers (skip this step if you use virtual machines or servers)

Pull the base image: an Ubuntu Linux image with ssh and Java (see the basics of using Docker).

    docker pull waterandair/jdk

Create the base containers (any number will do; 3 is suggested for learning):

    # login/password: root/root
    docker run -d --name=zookeeper1 waterandair/jdk
    docker run -d --name=zookeeper2 waterandair/jdk
    docker run -d --name=zookeeper3 waterandair/jdk
    ...
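Three is a sensible minimum because a ZooKeeper ensemble stays available only while a majority of its servers are up. The sketch below shows that arithmetic for small ensembles; the function names `majority` and `tolerated_failures` are illustrative and not part of ZooKeeper.

```shell
# Smallest number of servers that forms a majority in an ensemble of n servers.
majority() {
    echo $(( $1 / 2 + 1 ))
}

# Number of servers that can be down while a majority is still up.
tolerated_failures() {
    echo $(( $1 - ($1 / 2 + 1) ))
}

for n in 1 2 3 4 5; do
    echo "servers=$n majority=$(majority "$n") tolerated_failures=$(tolerated_failures "$n")"
done
```

With 3 servers one can fail, and a fourth server adds no extra tolerance, which is why odd ensemble sizes are the usual choice.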

Initialize the role

In any directory (here a folder named test), run `ansible-galaxy init zookeeper` to initialize a role.

Set the variables

Set the variables in test/zookeeper/defaults/main.yml:

    ---
    # defaults file for zookeeper
    # version
    zookeeper_version: 3.4.10
    # download URL
    zookeeper_download_url: "http://mirrors.shu.edu.cn/apache/zookeeper/stable/zookeeper-{{ zookeeper_version }}.tar.gz"
    # downloaded to ~/Downloads on the control node
    zookeeper_download_dest: ~/Downloads/zookeeper-{{ zookeeper_version }}.tar.gz
    # installation directory
    install_dir: /usr/local/
    # symlink path
    soft_dir: "{{ install_dir }}zookeeper"
    # ZooKeeper snapshot directory
    zookeeper_data_dir: /var/zookeeper/
    # file in which the environment variables are set
    env_file: ~/.bashrc

Prepare the ZooKeeper configuration template

First, a quick look at the available cluster modes.

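Cluster modes

ZooKeeper can run in three modes. Switching between them is simple: only the list of servers in the configuration file changes. For example: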

Cluster mode:

    # zoo.cfg
    # other settings omitted ...

    server.1=172.17.0.2:2888:3888
    server.2=172.17.0.3:2888:3888
    server.3=172.17.0.4:2888:3888

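Pseudo-cluster mode: several ZooKeeper servers run on a single node, each configured with different ports (each instance also needs its own clientPort and dataDir).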

    # zoo.cfg
    # other settings omitted ...

    server.1=172.17.0.2:2888:3888
    server.2=172.17.0.2:2889:3889
    server.3=172.17.0.2:2890:3890

Standalone mode:

    # zoo.cfg
    # other settings omitted ...

    server.1=172.17.0.2:2888:3888
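Each `server.N` line has the form `server.<id>=<host>:<port>:<port>`, where the first port is used by followers to connect to the leader and the second is used for leader election. As a rough sketch, the cluster-mode list above could be generated like this; the helper name `server_lines` is hypothetical, and ids are simply assigned in argument order.

```shell
# Print one server.<id> line per host, numbering the hosts from 1 in argument order.
server_lines() {
    id=1
    for host in "$@"; do
        echo "server.$id=$host:2888:3888"
        id=$((id + 1))
    done
}

server_lines 172.17.0.2 172.17.0.3 172.17.0.4
```

Whatever generates the list, every server in the ensemble must end up with the same set of lines.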

Edit the template file

Create the file zoo_sample.cfg.j2 in the test/zookeeper/templates/ directory.

The main settings here are the ZooKeeper snapshot directory (dataDir) and the list of cluster nodes.

    # The number of milliseconds of each tick
    tickTime=2000
    # The number of ticks that the initial
    # synchronization phase can take
    initLimit=10
    # The number of ticks that can pass between
    # sending a request and getting an acknowledgement
    syncLimit=5
    # the directory where the snapshot is stored.
    # do not use /tmp for storage, /tmp here is just
    # example sakes.
    # ZooKeeper snapshot directory
    dataDir={{ zookeeper_data_dir }}
    # the port at which the clients will connect
    clientPort=2181
    # the maximum number of client connections.
    # increase this if you need to handle more clients
    #maxClientCnxns=60
    #
    # Be sure to read the maintenance section of the
    # administrator guide before turning on autopurge.
    #
    # http://zookeeper.apache.org/doc/current/zookeeperAdmin.html#sc_maintenance
    #
    # The number of snapshots to retain in dataDir
    #autopurge.snapRetainCount=3
    # Purge task interval in hours
    # Set to "0" to disable auto purge feature
    #autopurge.purgeInterval=1

    {#
      Information about every node in the ZooKeeper cluster.
      The ansible_play_batch variable holds the list of hosts in the current play.
      Each host's id is read from its own inventory variables through hostvars;
      a bare id would repeat the id of the host being configured on every line.
      After rendering, the result looks like:
      server.1=172.17.0.2:2888:3888
      server.2=172.17.0.3:2888:3888
      server.3=172.17.0.4:2888:3888
    #}
    {% for host in ansible_play_batch %}
    server.{{ hostvars[host]['id'] }}={{ host }}:2888:3888
    {% endfor %}
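Once the template has been rendered, it is worth checking that every `server.N` id in zoo.cfg is unique, since a duplicated id usually means the loop picked up the wrong variable. A minimal sketch, assuming a hypothetical helper `duplicate_server_ids` and a sample file written to a temporary path for the demonstration:

```shell
# Print every server id that appears more than once in a zoo.cfg-style file.
duplicate_server_ids() {
    sed -n 's/^server\.\([0-9][0-9]*\)=.*/\1/p' "$1" | sort | uniq -d
}

# Sample rendered output, hard-coded so the sketch runs without Ansible.
cfg=$(mktemp)
cat > "$cfg" <<'EOF'
dataDir=/var/zookeeper/
server.1=172.17.0.2:2888:3888
server.2=172.17.0.3:2888:3888
server.3=172.17.0.4:2888:3888
EOF

dups=$(duplicate_server_ids "$cfg")
if [ -z "$dups" ]; then
    echo "all server ids are unique"
else
    echo "duplicate server ids: $dups"
fi
rm -f "$cfg"
```

On a managed host the function would be pointed at the rendered conf/zoo.cfg instead of the temporary file.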

Write the tasks

If a role's tasks involve many steps, they can be split across several files and then imported from test/zookeeper/tasks/main.yml. This makes them easier to debug and to read.

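Write the download tasks

Create download.yml in test/zookeeper/tasks/. It downloads the ZooKeeper archive to the control node and then unpacks it into the installation directory on each target host.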

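    ---
    # Download the archive to the control node (once, not once per host)
    - name: Check whether the ZooKeeper archive has already been downloaded
      command: ls {{ zookeeper_download_dest }}
      ignore_errors: true
      register: file_exist
      connection: local
      run_once: true

    - name: Download the archive
      get_url:
        url: "{{ zookeeper_download_url }}"
        dest: "{{ zookeeper_download_dest }}"
        force: yes
      # "is failed" replaces the old "|failed" filter syntax, which newer Ansible releases reject
      when: file_exist is failed
      connection: local
      run_once: true

    # Unpack into the installation directory on the remote servers
    - name: Unpack the archive
      unarchive:
        src: "{{ zookeeper_download_dest }}"
        dest: "{{ install_dir }}"
      become: true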

Write the configuration and startup tasks

Create init.yml in test/zookeeper/tasks/:

    ---
    - name: Create the symlink
      file:
        src: "{{ install_dir + 'zookeeper-' + zookeeper_version }}"
        dest: "{{ soft_dir }}"
        state: link

    - name: Set the ZooKeeper environment variables
      lineinfile:
        dest: "{{ env_file }}"
        insertafter: "{{ item.position }}"
        line: "{{ item.value }}"
        state: present
      with_items:
        - {position: EOF, value: "\n"}
        - {position: EOF, value: "{{ 'export ZOOKEEPER_HOME=' + soft_dir }}"}
        - {position: EOF, value: "export PATH=$ZOOKEEPER_HOME/bin:$PATH"}

    #- name: Make the environment variables take effect
    #  shell: source {{ env_file }}
    #  args:
    #    executable: /bin/bash

    - name: Render the configuration file
      template:
        src: zoo_sample.cfg.j2
        dest: "{{ soft_dir + '/conf/zoo.cfg' }}"

    - name: Set the server id (important)
      lineinfile:
        dest: "{{ zookeeper_data_dir + 'myid' }}"
        line: "{{ id }}"
        create: yes
        state: present

    # Starting the server requires the ZooKeeper and Java paths to be on PATH
    - name: Start the ZooKeeper server
      shell: zkServer.sh start
      environment:
        PATH: /usr/local/zookeeper/bin:/usr/local/java/bin:/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
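The myid file matters because each server reads it at startup to find out which `server.N` line in zoo.cfg describes itself. The following sketch checks that the two agree. The helper name `myid_matches_cfg` and the temporary sample files are assumptions made for illustration; on a real node you would pass the paths of zoo.cfg and myid instead.

```shell
# Succeed if the id stored in the myid file ($2) has a server.<id> line in the config ($1).
myid_matches_cfg() {
    id=$(tr -d '[:space:]' < "$2")
    [ -n "$id" ] && grep -q "^server\.$id=" "$1"
}

# Sample files, created in a temporary directory so the sketch runs anywhere.
dir=$(mktemp -d)
printf 'server.1=172.17.0.2:2888:3888\nserver.2=172.17.0.3:2888:3888\n' > "$dir/zoo.cfg"
echo 2 > "$dir/myid"

if myid_matches_cfg "$dir/zoo.cfg" "$dir/myid"; then
    echo "myid matches a server entry"
else
    echo "myid has no matching server entry"
fi
rm -rf "$dir"
```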

Combine all the tasks

Edit main.yml in test/zookeeper/tasks/:

    ---
    # tasks file for zookeeper
    - import_tasks: download.yml
    - import_tasks: init.yml

Write the hosts file

Create a file named hosts in the test/ directory:

    # The id matters here: it is each server's identifier within the ZooKeeper cluster
    [test]
    172.17.0.2 id=1
    172.17.0.3 id=2
    172.17.0.4 id=3

Write the playbook

Create run.yml in the test/ directory:

    - hosts: test
      remote_user: root
      roles:
        - zookeeper

Run

In the test/ directory, run:

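    # Ansible runs commands over ssh, so the recommended practice is to set up passwordless ssh login.
    # If that is not set up, add the -k flag and type the password by hand; here the root user's password is root.
    ansible-playbook -i hosts run.yml -k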

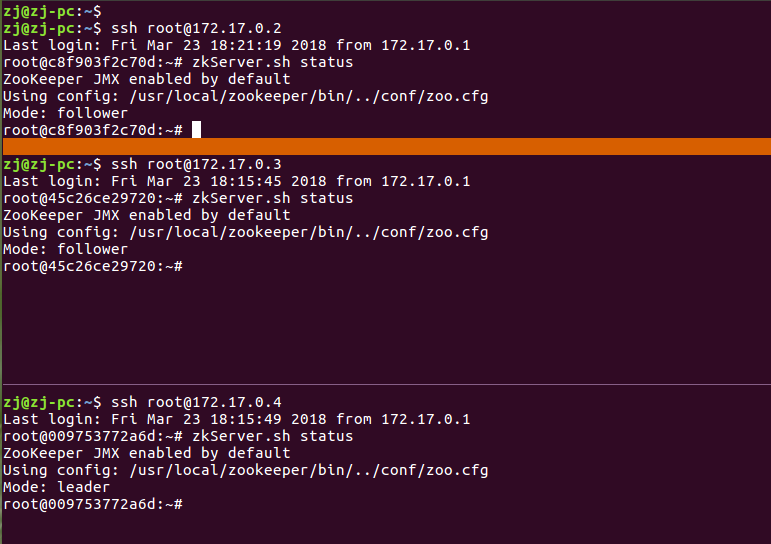

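Test

After installation, ssh into the servers in the cluster and run `zkServer.sh status` to check how the ZooKeeper service is running. Pay attention to the Mode line: in cluster mode, ZooKeeper automatically elects one node as leader, and the remaining nodes are followers.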
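To compare nodes quickly, the Mode line can be pulled out of the status output of each server. A small sketch, assuming the status output contains a line of the form `Mode: <role>`; the helper name `zk_mode` is hypothetical, and the sample text is hard-coded so that it runs without a server.

```shell
# Read zkServer.sh status output on stdin and print the value of its "Mode:" line.
zk_mode() {
    sed -n 's/^Mode:[[:space:]]*//p'
}

# Sample text standing in for real output.
printf 'Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg\nMode: follower\n' | zk_mode

# On a real node: zkServer.sh status 2>&1 | zk_mode
```

In a healthy three-node cluster, exactly one server should report leader and the other two follower.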

Common operations

Common client operations

Start the client:

    shell> bin/zkCli.sh

Show all available commands:

    zookeeper> help

List what a znode contains:

    zookeeper> ls /

List a node's children together with its stat data, such as update counts:

    zookeeper> ls2 /
    [zookeeper]
    cZxid = 0x0
    ctime = Thu Jan 01 08:00:00 CST 1970
    mZxid = 0x0
    mtime = Thu Jan 01 08:00:00 CST 1970
    pZxid = 0x0
    cversion = -1
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 0
    numChildren = 1

Create regular nodes:

    zookeeper> create /test1 "this is test1"
    Created /test1
    zookeeper> create /test1/server-01 "127.0.0.1"
    Created /test1/server-01

Get a node's value:

    zookeeper> get /test1
    this is test1
    cZxid = 0x10000000a
    ctime = Mon Aug 20 18:05:42 CST 2018
    mZxid = 0x10000000a
    mtime = Mon Aug 20 18:05:42 CST 2018
    pZxid = 0x10000000b
    cversion = 1
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 13
    numChildren = 1

    zookeeper> get /test1/server-01
    127.0.0.1
    cZxid = 0x10000000b
    ctime = Mon Aug 20 18:06:28 CST 2018
    mZxid = 0x10000000b
    mtime = Mon Aug 20 18:06:28 CST 2018
    pZxid = 0x10000000b
    cversion = 0
    dataVersion = 0
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 9
    numChildren = 0
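The `dataLength` field in the output above is the size of the node's data in bytes. A quick check against the two values stored earlier (the helper name `data_length` is only for illustration):

```shell
# Print the number of bytes in a string, which is what dataLength reports for that data.
data_length() {
    printf '%s' "$1" | wc -c | tr -d ' '
}

data_length "this is test1"   # prints 13
data_length "127.0.0.1"       # prints 9
```

The quotes typed in the `create` command are not part of the stored data, which is why they are not counted.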

Create an ephemeral node:

    zookeeper> create -e /test-emphemeral "this is emphemeral znode"
    Created /test-emphemeral

    zookeeper> ls /
    [zookeeper, test1, test-emphemeral]

    zookeeper> quit

    # After the client quits and reconnects to the server, the ephemeral node has been deleted
    zookeeper> ls /
    [zookeeper, test1]

Create sequential nodes:

    zookeeper> create -s /test1/a 10
    Created /test1/a0000000001
    zookeeper> create -s /test1/b 10
    Created /test1/b0000000002
    zookeeper> create -s /test1/c 10
    Created /test1/c0000000003
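The suffix appended by `-s` is a 10-digit, zero-padded counter kept by the parent znode, which is why the three nodes above are numbered consecutively even though their prefixes differ. The naming can be reproduced as follows; `sequential_name` is a hypothetical helper, and the counter value is passed in by hand rather than read from a server.

```shell
# Build a sequential znode name from a path prefix and a counter value.
sequential_name() {
    printf '%s%010d\n' "$1" "$2"
}

sequential_name /test1/a 1
sequential_name /test1/b 2
sequential_name /test1/c 3
```

Because the padding is fixed-width, sorting the child names as strings also sorts them by creation order within one prefix.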

Change a node's data:

    zookeeper> set /test1 "updated value"

Watch a node for data changes:

    # Open client 1 and register a watch for data changes on /test1
    zookeeper1> get /test1 watch
    # Open client 2 and change the value of /test1
    zookeeper2> set /test1 "change data"
    # Client 1 receives the data-change notification
    WATCHER::

    WatchedEvent state:SyncConnected type:NodeDataChanged path:/test1

Watch a node for changes to its children (path changes):

    # Open client 1 and register a watch for changes to the children of /test1
    zookeeper> ls /test1 watch
    [server-01, b0000000002, a0000000001, c0000000003, a0000000004]

    # Open client 2 and create a child node under /test1
    zookeeper> create /test1/test "test"
    Created /test1/test

    # Client 1 receives the children-change notification
    WATCHER::

    WatchedEvent state:SyncConnected type:NodeChildrenChanged path:/test1

Delete a node:

    zookeeper> delete /test1/test

Delete a node recursively:

    zookeeper> rmr /test2

View a node's status:

    zookeeper> stat /test1
    cZxid = 0x10000000a
    ctime = Mon Aug 20 18:05:42 CST 2018
    mZxid = 0x100000022
    mtime = Mon Aug 20 18:32:06 CST 2018
    pZxid = 0x100000023
    cversion = 6
    dataVersion = 5
    aclVersion = 0
    ephemeralOwner = 0x0
    dataLength = 12
    numChildren = 6
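The zxid fields (`cZxid`, `mZxid`, `pZxid`) are 64-bit transaction ids: the high 32 bits hold the leader epoch and the low 32 bits hold a counter within that epoch. A sketch that splits two of the values shown above (the helper names `zxid_epoch` and `zxid_counter` are illustrative):

```shell
# Leader epoch: the high 32 bits of a zxid.
zxid_epoch() {
    echo $(( $1 >> 32 ))
}

# Transaction counter within the epoch: the low 32 bits of a zxid.
zxid_counter() {
    echo $(( $1 & 0xffffffff ))
}

echo "cZxid 0x10000000a: epoch=$(zxid_epoch 0x10000000a) counter=$(zxid_counter 0x10000000a)"
echo "pZxid 0x100000023: epoch=$(zxid_epoch 0x100000023) counter=$(zxid_counter 0x100000023)"
```

Both values belong to epoch 1, so the counters alone (10 and 35) show the order in which the transactions were applied.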